New Developments in AI

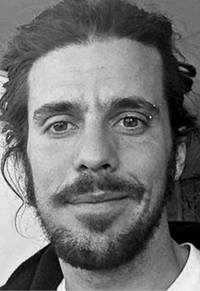

Nikete (Nicolás Della Penna)

Florian Glatz and AI researcher Nikete (Nicolás Della Penna) talk about new developments in AI, training data and computers judging legal cases.

FG – Florian Glatz

NI – Nikete (Nicolás Della Penna)

FG: Nikete, you are an AI researcher and you have been researching at MIT for a number of years.

NI: I have worked with an AI research group at MIT for a number of years and I have been affiliated with them since last year. The unifying theme of my Ph.D. thesis is the study of how to make better decisions, be it from past decisions or from the input of many experts. Especially when you are not forcing decision-makers to take a specific position, but advising them, and they are ultimately the ones who decide. So, for example, the doctor in the medical system decides what to give to each patient. When the doctor then checks what the patients actually did, you can call this compliance awareness. So, the agents might or